The ROI Trap: Why the "Mathematician’s Proof" Kills AI Investment

Dmitry Borodin

11/3/20255 min read

We, as executives and business leaders, are hardwired to seek certainty. We demand predictability in our investments, and we reward solutions that come with a guarantee - a proof that they'll work.

But when it comes to modern product development, particularly with complex AI and machine learning systems, that very demand for certainty is the single biggest risk to our strategic investments.

My professional journey - from the world of pure mathematics, where elegance and logical rigor are everything, to the messy reality of building successful global AI products - taught me a crucial, counter-intuitive lesson: A perfect proof is often an imperfect strategy.

To succeed, we must swap the logical mindset of the Mathematician for the empirical agility of the Physicist. This isn't academic jargon; it is the difference between a successful, sustained product and a massive write-off.

In this article, we'll cover:

The critical difference between an elegant theorem and an adaptive strategy

The forces (Data Distribution, Data Drift, Edge Cases) that kill "proven" AI solutions

The organizational shift: How to stop budgeting for Proof and start funding Learning Loops

Why leaders must demand Adaptation, not fragile perfection

1. The Fundamental Conflict: Fixed Proof vs. Adaptive Strategy

The core mistake organizations make is treating a new AI solution like a solved equation rather than a live experiment.

❌ The Mathematician's Mindset: The Pursuit of Fixed Proof

This approach prioritizes internal elegance and reduction. When a new problem appears, the mathematician's instinct is to find a known, similar problem and apply the existing, "proven" solution.

The Goal: Find the most elegant proof that the system should work based on the logic or past success ("We can reuse this model; it worked on the last project, right?").

The Outcome: A sense of permanence. The solution is considered done and universally true, eliminating the need for ongoing validation.

The Risk: Blind faith. This approach systematically ignores variables outside the defined system - like changes in the market, user behavior, or data quality - which, in the real world, inevitably leads to critical failure post-launch.

✅ The Physicist's Mindset: The Commitment to Empirical Validation

This approach prioritizes external performance and adaptation. When a new phenomenon appears (a new product launch, a new market), the physicist treats it as a unique system that must be tested under real conditions.

The Goal: Treat the ML model or product feature as a hypothesis that must be validated by reality.

The Outcome: A strategy of adaptation. The solution is understood to be valid only for a specific context and timeframe, making continuous feedback mandatory.

The Benefit: Risk Mitigation. By building testing and learning loops into the operational framework, the system is designed to identify and pivot based on inevitable real-world failures, sustaining its value over time.

2. The Production Gap: Why AI Amplifies the Risk

If you're building traditional, rule-based software, you can sometimes survive the mathematician's mindset. The rules are explicit, and the outcomes are predictable. But in the age of data-driven products, and especially with AI/ML/LLM systems, the approach becomes an existential threat to your investment.

AI and machine learning, by their very nature, introduce variables that are completely outside the control of the initial project plan. They force us into the physicist's lab whether we want to go there or not.

The Three Unpredictable Forces that Kill 'Proven' Solutions:

Context is King: The Data Distribution Trap A mathematical proof holds universally. An ML model does not. A model trained on user data in market A (e.g., high-income, urban users) may be considered "proven" with 95% internal accuracy. But when deployed in market B (e.g., lower-income, rural users), the model fails because the data distribution -the very landscape it was built to navigate - has fundamentally changed. Believing the model's success is portable is a costly mathematical fallacy.

The Hidden Cost of Time: Data Drift Unlike a theorem, which remains true forever, the real world is constantly shifting. Data drift refers to the change in data characteristics over time. User preferences evolve, market conditions flip, and competitors react. A model perfectly validated last quarter will slowly but surely degrade this quarter because the data flowing into it no longer matches the training data. The mathematical mindset sees a solution as permanent; the reality is that its value is perishable.

The Unforeseen Variable: Real-World Edge Cases The most dangerous failures are caused by scenarios that only emerge with large-scale deployment. Your testing environment is a simulation; production is the messy, complex reality. These edge cases- unusual user inputs, rare sensor readings, or unexpected combinations of events - are the equivalent of gravity not behaving as expected in an experiment. If you don't build systems designed to learn from these surprises, your initial investment will rapidly destabilize.

The executive takeaway is clear: Investing in an AI project without mandating the physicist’s approach to continuous validation is not a cost-saving measure - it is a guarantee that the system's return on investment will peak quickly and then accelerate its decline toward worthlessness.

3. The Strategic Mandate: Leading the Cultural Shift

The shift from the mathematician's mindset to the physicist's mindset is not a technical choice; it is a strategic and cultural mandate that must be led from the top. When an organization rewards only the proof (the launch date) and punishes the experiment (the pivot), it guarantees long-term product failure.

To build adaptive intelligence systems that sustain their value - rather than fragile, fixed solutions that quickly decay - leaders must fundamentally change how they allocate resources, measure success, and define project closure.

A. Stop Budgeting for Proofs; Start Funding Learning Loops

Traditional project budgeting allocates resources toward a fixed deliverable: the final, proven solution. This creates organizational pressure to declare the problem "solved" prematurely.

The strategic alternative is to treat the initial deployment as the end of Phase 1 (the Hypothesis Build) and budget significant, sustained resources for Phase 2 (The Validation and Adaptation Loop).

Actionable Budget Shift: Ensure budgets include specific allocations for post-launch monitoring, A/B testing in production, model retraining pipelines, and the engineering time required to rapidly implement findings from the real world. If you only fund the build, you’re not funding the value.

B. Re-Engineer Your Metrics: From Launch to Longevity

We need to stop rewarding teams solely for "launching on time" or achieving "100% test coverage" (metrics of internal proof). We must reward performance that reflects market reality.

The Metric of the Mathematician: High training accuracy; successful migration of data; on-time deployment.

The Metric of the Physicist: Sustained Business Performance in production (e.g., Conversion Rate uplift, Reduction in false positives, ROI maintained 6 months post-launch); Speed of model Retraining and Redeployment (Time to Adapt).

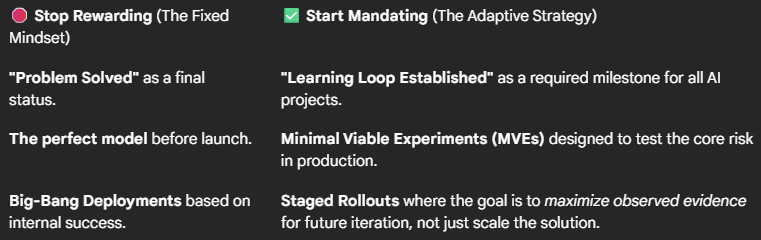

C. The New Rules of Engagement

To make this cultural shift stick, we must institutionalize the physicist's approach:

This mandate shifts your organization from being reactive - scrambling when a proven system fails - to being proactively adaptive, mitigating risk and sustaining value by design.

4. The Bottom Line: Demand Adaptation, Not Proof

The most sophisticated AI model in the world is still just a very complex hypothesis.

As leaders, we must retire the notion that a product - especially one driven by dynamic data - can ever be "proven correct" by logic alone. Its true value is validated empirically, demonstrated daily by its performance in the wild.

The next time your team presents a solution, ask yourself:

Does this feel like an elegant, fixed theorem based on a historical data set? (The Mathematician's Proof)

Or does this feel like an adaptive, iterative experiment built to learn from the unpredictability of the market? (The Physicist's Strategy)

Embracing the physicist's mindset - Hypothesize, Test, Learn, Refine, and Sustain - is not just good product management; it is a fundamental strategy for risk mitigation and securing a lasting return on your innovation investments. It’s the essential shift from building fragile perfection to building robust resilience.

The Conversation Starts Here:

Examine your product portfolio and budget allocations today. What is your organization's biggest blind spot when moving from "Proof" to "Practice"?

#BeyondThePilot #AIStrategy #Leadership #RiskManagement #ProductManagement